- Helm install pod in pending state:

When you execute kubectl get events you will see the following error:

no persistent volumes available for this claim and no storage class is set or

PersistentVolumeClaim is not bound

This error usually occurs on Kubernetes clusters set up with kubeadm.

You will need to create a PersistentVolume with the following YAML file (pv-create.yml):

    kind: PersistentVolume
    apiVersion: v1
    metadata:
      name: redis-data
      labels:
        type: local
    spec:
      storageClassName: generic
      capacity:
        storage: 8Gi
      accessModes:
        - ReadWriteOnce
      hostPath:
        path: "/bitnami/redis"

Create the PV with:

$ kubectl create -f pv-create.yml

Then you will need to create a PersistentVolumeClaim with the following YAML file (pv-claim.yml):

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: redis-data
    spec:
      storageClassName: generic
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 8Gi

Create the PVC with:

$ kubectl create -f pv-claim.yml

Check the PVC status with kubectl get pvc. The status should be Bound.
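The final check can be scripted. The following is a minimal sketch, assuming the default table output of kubectl get pvc puts the claim name in the first column and its status in the second. The helper name pvc_status is hypothetical, and the sample line is hand-written for illustration rather than captured from a cluster.

```shell
# pvc_status CLAIM: read `kubectl get pvc` table output on stdin and
# print the STATUS column for the named claim (hypothetical helper).
pvc_status() {
  # Assumes column 1 is NAME and column 2 is STATUS.
  awk -v name="$1" '$1 == name { print $2 }'
}

# Against a live cluster (not run here):
#   kubectl get pvc | pvc_status redis-data
# Offline demonstration with a hand-written sample line:
printf 'redis-data Bound\n' | pvc_status redis-data
```

If the helper prints Pending instead of Bound, the claim has not yet found a matching PersistentVolume.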

Installing Kubernetes 1.8.1 on centos 7 with flannel

Prerequisites:-

You need at least two VMs (1 master and 1 slave) before creating the cluster in order to test the full functionality of k8s.

1] Master :-

Minimum of 1 Gb RAM, 1 CPU core and 50 Gb HDD ( suggested )

2] Slave :-

Minimum of 1 Gb RAM, 1 CPU core and 50 Gb HDD ( suggested )

3] Also, make sure of following things.

- Network interconnectivity between VMs.

- hostnames

- Prefer to give Static IP.

- DNS entries

- Disable SELinux by setting SELINUX=disabled in the config file (this takes effect after a reboot):

$ vi /etc/selinux/config

- Stop and disable the firewall (if you are not familiar with configuring firewall rules).

$ systemctl stop firewalld

$ systemctl disable firewalld
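The SELinux edit from the list above can also be made without opening an editor. This is a sketch under stated assumptions: GNU sed (as shipped with CentOS 7) and a config file containing a line that starts with SELINUX=. The helper name set_selinux_disabled is hypothetical. The demonstration works on a scratch file so that nothing on the host is changed.

```shell
# set_selinux_disabled FILE: rewrite the SELINUX= line in FILE to "disabled".
# Hypothetical helper; FILE defaults to /etc/selinux/config.
set_selinux_disabled() {
  file="${1:-/etc/selinux/config}"
  # Only the SELINUX= line matches; SELINUXTYPE= and comments are left alone.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# Demonstration on a scratch file rather than the real config:
tmp="$(mktemp)"
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$tmp"
set_selinux_disabled "$tmp"
cat "$tmp"
rm -f "$tmp"
```

On a real host the function would be run as root with no argument, followed by a reboot.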

The following steps create a k8s cluster on the above VMs using kubeadm on CentOS 7.

Step 1] Installing kubelet and kubeadm on all your hosts

$ ARCH=x86_64

$ cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-${ARCH}
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg
        https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF

$ setenforce 0

$ yum install -y docker kubelet kubeadm kubectl kubernetes-cni

$ systemctl enable docker && systemctl start docker

$ systemctl enable kubelet && systemctl start kubelet

The kubelet service might fail to start at this point. Check /var/log/messages for an error like the following:

Oct 16 09:55:33 k8s-master kubelet: error: unable to load client CA file /etc/kubernetes/pki/ca.crt: open /etc/kubernetes/pki/ca.crt: no such file or directory

Oct 16 09:55:33 k8s-master systemd: kubelet.service: main process exited, code=exited, status=1/FAILURE

In that case, run kubeadm init first, as described in the next step, and then start the kubelet service.

Step 2.1] Initializing your master

$ kubeadm init

Note:-

- Execute the above command on the master node. It selects one of the network interfaces to advertise the API server on. To use a different interface, pass "--apiserver-advertise-address=<ip-address>" as an argument, so the whole command becomes:

$ kubeadm init --apiserver-advertise-address=<ip-address>

- K8s lets you use a pod network of your choice, such as flannel or calico. I am using flannel, which needs the pod network CIDR to be passed explicitly, so the whole command becomes:

$ kubeadm init --apiserver-advertise-address=<ip-address> --pod-network-cidr=10.244.0.0/16

Example: $ kubeadm init --apiserver-advertise-address=172.31.14.55 --pod-network-cidr=10.244.0.0/16

Step 2.2] Start using cluster

$ sudo cp /etc/kubernetes/admin.conf $HOME/

$ sudo chown $(id -u):$(id -g) $HOME/admin.conf

$ export KUBECONFIG=$HOME/admin.conf

-> Use the same network CIDR as shown above, because it is also configured in the flannel YAML file that we apply in step 3.

-> At the end of its output, kubeadm init prints a kubeadm join command containing a token. Make a note of it, because it is used to join the slaves.

Step 3] Installing a pod network

K8s supports several pod networks, and the choice is up to the user. For this demo I am using the flannel network. As of k8s-1.6, the cluster is more secure by default. It uses RBAC (Role Based Access Control), so make sure that the network you are going to use supports RBAC and k8s-1.6.

- Create the RBAC resources for flannel:

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/k8s-manifests/kube-flannel-rbac.yml

Check whether the pods are being created:

$ kubectl get pods --all-namespaces

- Create the flannel pods:

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Check whether the pods are being created:

$ kubectl get pods --all-namespaces -o wide

-> At this stage all your pods should be in the Running state.

-> The "-o wide" option gives more details, such as the pod IP and the slave where each pod is deployed.

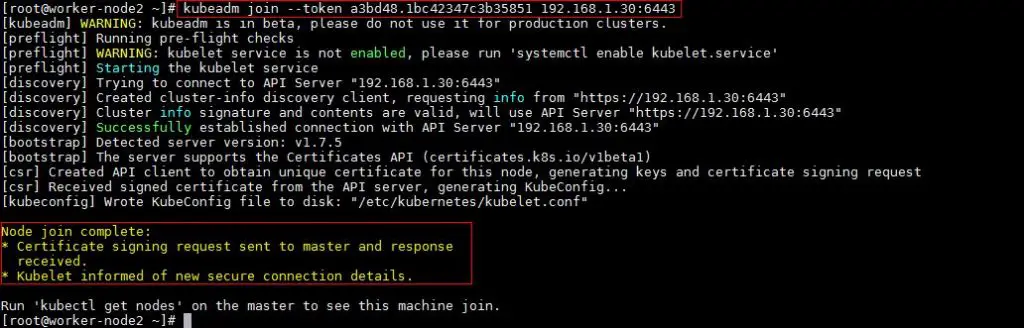

Step 4] Joining your nodes

SSH to the slave and execute the following command to join the existing cluster.

$ kubeadm join --token <token> <master-ip>:<master-port>

The join command may also include a --discovery-token-ca-cert-hash argument, so make sure you copy the entire join command from the kubeadm init output.

Go to the master node and check whether the new slave has joined:

$ kubectl get nodes

-> If the slave is not ready, wait a few seconds. The new slave will join soon.

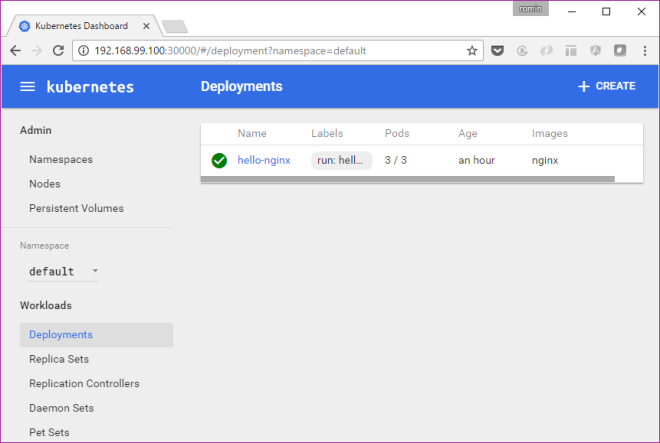

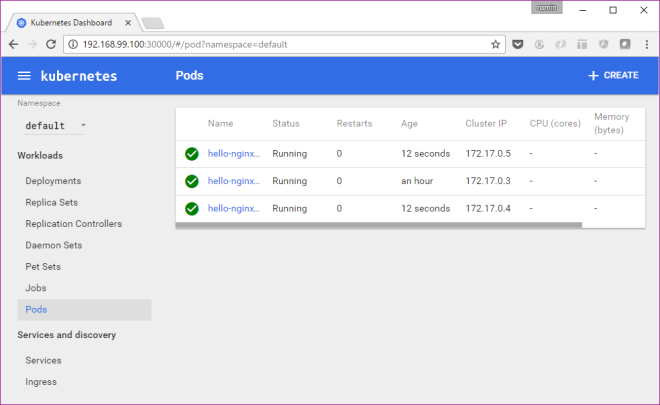

Step 5] Verify your cluster by running sample nginx application

$ vi sample_nginx.yaml

----------------------------------------

    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 2 # tells deployment to run 2 pods matching the template
      template: # create pods using pod definition in this template
        metadata:
          # unlike pod-nginx.yaml, the name is not included in the meta data as a unique name is
          # generated from the deployment name
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.7.9
            ports:
            - containerPort: 80

----------------------------------------

$ kubectl create -f sample_nginx.yaml

Verify that the pods are being created.

$ kubectl get pods

$ kubectl get deployments

Now, let's expose the deployment so that the service is accessible to other pods in the cluster.

$ kubectl expose deployment nginx-deployment --name=nginx-service --port=80 --target-port=80 --type=NodePort

The above command creates a service named "nginx-service". The service listens on the port given by "--port" and forwards traffic to "--target-port", which is the port on the pod. By default a service is reachable only from within the cluster. The "NodePort" type is used to make it reachable through the host IP as well.

--type=NodePort :- when this option is given, k8s picks a free port in the range 30000-32767 and binds the service to that port on all the VMs of the cluster. If no free port is found, it returns an error.
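When opening firewall ports or hard-coding a node port, it can help to confirm that a value falls inside the range mentioned above. The following is a small sketch. The function name in_nodeport_range is hypothetical, and the bounds are the 30000-32767 range stated in the text.

```shell
# in_nodeport_range PORT: succeed if PORT is an integer within 30000-32767.
in_nodeport_range() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;  # reject empty or non-numeric input
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

for port in 80 30080 32768; do
  if in_nodeport_range "$port"; then
    echo "$port: inside the NodePort range"
  else
    echo "$port: outside the NodePort range"
  fi
done
```

The port that k8s actually assigned is shown in the PORT(S) column of kubectl get svc.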

Check whether the service has been created:

$ kubectl get svc

Try to curl the service on its cluster IP:

$ curl <service-IP>:80

Run this from all the VMs, including the master. The Nginx welcome page should be accessible.

$ curl <master-ip>:<nodePort>

$ curl <slave-IP>:<nodePort>

Execute these from all the VMs. The Nginx welcome page should be accessible.

Also, access the Nginx home page using a browser.

Helm: Installation and Configuration

PREREQUISITES

- You must have Kubernetes installed. We recommend version 1.4.1 or later.

- You should also have a local configured copy of kubectl.

Helm will figure out where to install Tiller by reading your Kubernetes configuration file (usually $HOME/.kube/config). This is the same file that kubectl uses.

To find out which cluster Tiller would install to, you can run kubectl config current-context or kubectl cluster-info.

$ kubectl config current-context

my-cluster

INSTALL HELM

Download a binary release of the Helm client. You can use tools like homebrew, or look at the official releases page.

For more details, or for other options, see the installation guide.

INITIALIZE HELM AND INSTALL TILLER

Once you have Helm ready, you can initialize the local CLI and also install Tiller into your Kubernetes cluster in one step:

$ helm init

This will install Tiller into the Kubernetes cluster you saw with kubectl config current-context.

TIP: Want to install into a different cluster? Use the --kube-context flag.

TIP: When you want to upgrade Tiller, just run helm init --upgrade.

INSTALL AN EXAMPLE CHART

To install a chart, you can run the helm install command. Helm has several ways to find and install a chart, but the easiest is to use one of the official stable charts.

$ helm repo update # Make sure we get the latest list of charts

$ helm install stable/mysql

Released smiling-penguin

In the example above, the stable/mysql chart was released, and the name of our new release is smiling-penguin. You get a simple idea of the features of this MySQL chart by running helm inspect stable/mysql.

Whenever you install a chart, a new release is created. So one chart can be installed multiple times into the same cluster. And each can be independently managed and upgraded.

The helm install command is a very powerful command with many capabilities. To learn more about it, check out the Using Helm Guide.

LEARN ABOUT RELEASES

It’s easy to see what has been released using Helm:

$ helm ls

NAME VERSION UPDATED STATUS CHART

smiling-penguin 1 Wed Sep 28 12:59:46 2016 DEPLOYED mysql-0.1.0

The helm list function will show you a list of all deployed releases.

UNINSTALL A RELEASE

To uninstall a release, use the helm delete command:

$ helm delete smiling-penguin

Removed smiling-penguin

This will uninstall smiling-penguin from Kubernetes, but you will still be able to request information about that release:

$ helm status smiling-penguin

Status: DELETED

...

Because Helm tracks your releases even after you’ve deleted them, you can audit a cluster’s history, and even undelete a release (with helm rollback).

READING THE HELP TEXT

To learn more about the available Helm commands, use helm help or type a command followed by the -h flag:

$ helm get -h


Installing Helm

There are two parts to Helm: The Helm client (helm) and the Helm server (Tiller). This guide shows how to install the client, and then proceeds to show two ways to install the server.

INSTALLING THE HELM CLIENT

The Helm client can be installed either from source, or from pre-built binary releases.

From the Binary Releases

Every release of Helm provides binary releases for a variety of OSes. These binary versions can be manually downloaded and installed.

- Download your desired version

- Unpack it (tar -zxvf helm-v2.0.0-linux-amd64.tgz)

- Find the helm binary in the unpacked directory, and move it to its desired destination (mv linux-amd64/helm /usr/local/bin/helm)

From there, you should be able to run the client: helm help.

From Homebrew (macOS)

Members of the Kubernetes community have contributed a Helm formula build to Homebrew. This formula is generally up to date.

brew install kubernetes-helm

(Note: There is also a formula for emacs-helm, which is a different project.)

FROM SCRIPT

Helm now has an installer script that will automatically grab the latest version of the Helm client and install it locally.

You can fetch that script, and then execute it locally. It’s well documented so that you can read through it and understand what it is doing before you run it.

$ curl https://raw.githubusercontent.com/kubernetes/helm/master/scripts/get > get_helm.sh

$ chmod 700 get_helm.sh

$ ./get_helm.sh

Yes, you can curl https://raw.githubusercontent.com/kubernetes/helm/master/scripts/get | bash that if you want to live on the edge.

From Canary Builds

“Canary” builds are versions of the Helm software that are built from the latest master branch. They are not official releases, and may not be stable. However, they offer the opportunity to test the cutting edge features.

Canary Helm binaries are stored in the Kubernetes Helm GCS bucket.

From Source (Linux, macOS)

Building Helm from source is slightly more work, but is the best way to go if you want to test the latest (pre-release) Helm version.

You must have a working Go environment with glide and Mercurial installed.

$ cd $GOPATH

$ mkdir -p src/k8s.io

$ cd src/k8s.io

$ git clone https://github.com/kubernetes/helm.git

$ cd helm

$ make bootstrap build

The bootstrap target will attempt to install dependencies, rebuild the vendor/ tree, and validate configuration.

The build target will compile helm and place it in bin/helm. Tiller is also compiled, and is placed in bin/tiller.

INSTALLING TILLER

Tiller, the server portion of Helm, typically runs inside of your Kubernetes cluster. But for development, it can also be run locally, and configured to talk to a remote Kubernetes cluster.

Easy In-Cluster Installation

The easiest way to install tiller into the cluster is simply to run helm init. This will validate that helm’s local environment is set up correctly (and set it up if necessary). Then it will connect to whatever cluster kubectl connects to by default (kubectl config view). Once it connects, it will install tiller into the kube-system namespace.

After helm init, you should be able to run kubectl get pods --namespace kube-system and see Tiller running.

You can explicitly tell helm init to…

- Install the canary build with the --canary-image flag

- Install a particular image (version) with --tiller-image

- Install to a particular cluster with --kube-context

- Install into a particular namespace with --tiller-namespace

Once Tiller is installed, running helm version should show you both the client and server version. (If it shows only the client version, helm cannot yet connect to the server. Use kubectl to see if any tiller pods are running.)

Helm will look for Tiller in the kube-system namespace unless --tiller-namespace or TILLER_NAMESPACE is set.
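The environment-variable half of this lookup can be mirrored in a script that needs to inspect the Tiller pods. This is a minimal sketch that covers only TILLER_NAMESPACE and the kube-system default. It does not model the --tiller-namespace flag, and the function name tiller_namespace is hypothetical.

```shell
# tiller_namespace: print the namespace to look in for Tiller, using
# TILLER_NAMESPACE when it is set and kube-system otherwise.
tiller_namespace() {
  printf '%s\n' "${TILLER_NAMESPACE:-kube-system}"
}

# To list pods in that namespace on a live cluster (not run here):
#   kubectl get pods --namespace "$(tiller_namespace)"
echo "Tiller namespace: $(tiller_namespace)"
```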

Installing Tiller Canary Builds

Canary images are built from the master branch. They may not be stable, but they offer you the chance to test out the latest features.

The easiest way to install a canary image is to use helm init with the --canary-image flag:

$ helm init --canary-image

This will use the most recently built container image. You can always uninstall Tiller by deleting the Tiller deployment from the kube-system namespace using kubectl.

Running Tiller Locally

For development, it is sometimes easier to work on Tiller locally, and configure it to connect to a remote Kubernetes cluster.

The process of building Tiller is explained above.

Once tiller has been built, simply start it:

$ bin/tiller

Tiller running on :44134

When Tiller is running locally, it will attempt to connect to the Kubernetes cluster that is configured by kubectl. (Run kubectl config view to see which cluster that is.)

You must tell helm to connect to this new local Tiller host instead of connecting to the one in-cluster. There are two ways to do this. The first is to specify the --host option on the command line. The second is to set the $HELM_HOST environment variable.

$ export HELM_HOST=localhost:44134

$ helm version # Should connect to localhost.

Client: &version.Version{SemVer:"v2.0.0-alpha.4", GitCommit:"db...", GitTreeState:"dirty"}

Server: &version.Version{SemVer:"v2.0.0-alpha.4", GitCommit:"a5...", GitTreeState:"dirty"}

Importantly, even when running locally, Tiller will store release configuration in ConfigMaps inside of Kubernetes.

UPGRADING TILLER

As of Helm 2.2.0, Tiller can be upgraded using helm init --upgrade.

For older versions of Helm, or for manual upgrades, you can use kubectl to modify the Tiller image:

$ export TILLER_TAG=v2.0.0-beta.1 # Or whatever version you want

$ kubectl --namespace=kube-system set image deployments/tiller-deploy tiller=gcr.io/kubernetes-helm/tiller:$TILLER_TAG

deployment "tiller-deploy" image updated

Setting TILLER_TAG=canary will get the latest snapshot of master.

DELETING OR REINSTALLING TILLER

Because Tiller stores its data in Kubernetes ConfigMaps, you can safely delete and re-install Tiller without worrying about losing any data. The recommended way of deleting Tiller is with kubectl delete deployment tiller-deploy --namespace kube-system, or more concisely helm reset.

Tiller can then be re-installed from the client with:

$ helm init

CONCLUSION

In most cases, installation is as simple as getting a pre-built helm binary and running helm init. This document covers additional cases for those who want to do more sophisticated things with Helm.

Once you have the Helm Client and Tiller successfully installed, you can move on to using Helm to manage charts.


Kubernetes Distribution Guide

This document captures information about using Helm in specific Kubernetes environments.

We are trying to add more details to this document. Please contribute via Pull Requests if you can.

MINIKUBE

Helm is tested and known to work with minikube. It requires no additional configuration.

SCRIPTS/LOCAL-CLUSTER AND HYPERKUBE

Hyperkube configured via scripts/local-cluster.sh is known to work. For raw Hyperkube you may need to do some manual configuration.

GKE

Google’s GKE hosted Kubernetes platform is known to work with Helm, and requires no additional configuration.

UBUNTU WITH ‘KUBEADM’

Kubernetes bootstrapped with kubeadm is known to work on the following Linux distributions:

- Ubuntu 16.04

- CAN SOMEONE CONFIRM ON FEDORA?

Some versions of Helm (v2.0.0-beta2) require you to export KUBECONFIG=/etc/kubernetes/admin.conf or create a ~/.kube/config.

CONTAINER LINUX BY COREOS

Helm requires that kubelet have access to a copy of the socat program to proxy connections to the Tiller API. On Container Linux the Kubelet runs inside of a hyperkube container image that has socat. So, even though Container Linux doesn’t ship socat the container filesystem running kubelet does have socat. To learn more read the Kubelet Wrapper docs.


Installation: Frequently Asked Questions

This section tracks some of the more frequently encountered issues with installing or getting started with Helm.

We’d love your help making this document better. To add, correct, or remove information, file an issue or send us a pull request.

DOWNLOADING

I want to know more about my downloading options.

Q: I can’t get to GitHub releases of the newest Helm. Where are they?

A: We no longer use GitHub releases. Binaries are now stored in a GCS public bucket.

Q: Why aren’t there Debian/Fedora/… native packages of Helm?

We’d love to provide these or point you toward a trusted provider. If you’re interested in helping, we’d love it. This is how the Homebrew formula was started.

Q: Why do you provide a curl ...|bash script?

A: There is a script in our repository (scripts/get) that can be executed as a curl ..|bash script. The transfers are all protected by HTTPS, and the script does some auditing of the packages it fetches. However, the script has all the usual dangers of any shell script.

We provide it because it is useful, but we suggest that users carefully read the script first. What we’d really like, though, are better packaged releases of Helm.

INSTALLING

I’m trying to install Helm/Tiller, but something is not right.

Q: How do I put the Helm client files somewhere other than ~/.helm?

Set the $HELM_HOME environment variable, and then run helm init:

export HELM_HOME=/some/path

helm init --client-only

Note that if you have existing repositories, you will need to re-add them with helm repo add....

Q: How do I configure Helm, but not install Tiller?

A: By default, helm init will ensure that the local $HELM_HOME is configured, and then install Tiller on your cluster. To locally configure, but not install Tiller, use helm init --client-only.

Q: How do I manually install Tiller on the cluster?

A: Tiller is installed as a Kubernetes deployment. You can get the manifest by running helm init --dry-run --debug, and then manually install it with kubectl. It is suggested that you do not remove or change the labels on that deployment, as they are sometimes used by supporting scripts and tools.

Q: Why do I get Error response from daemon: target is unknown during Tiller install?

A: Users have reported being unable to install Tiller on Kubernetes instances that are using Docker 1.13.0. The root cause of this was a bug in Docker that made that one version incompatible with images pushed to the Docker registry by earlier versions of Docker.

This issue was fixed shortly after the release, and is available in Docker 1.13.1-RC1 and later.

GETTING STARTED

I successfully installed Helm/Tiller but I can’t use it.

Q: Trying to use Helm, I get the error “client transport was broken”

E1014 02:26:32.885226 16143 portforward.go:329] an error occurred forwarding 37008 -> 44134: error forwarding port 44134 to pod tiller-deploy-2117266891-e4lev_kube-system, uid : unable to do port forwarding: socat not found.

2016/10/14 02:26:32 transport: http2Client.notifyError got notified that the client transport was broken EOF.

Error: transport is closing

A: This is usually a good indication that Kubernetes is not set up to allow port forwarding.

Typically, the missing piece is socat. If you are running CoreOS, we have been told that it may have been misconfigured on installation. The CoreOS team recommends reading this:

Here are a few resolved issues that may help you get started:

Q: Trying to use Helm, I get the error “lookup XXXXX on 8.8.8.8:53: no such host”

Error: Error forwarding ports: error upgrading connection: dial tcp: lookup kube-4gb-lon1-02 on 8.8.8.8:53: no such host

A: We have seen this issue with Ubuntu and Kubeadm in multi-node clusters. The issue is that the nodes expect certain DNS records to be obtainable via global DNS. Until this is resolved upstream, you can work around the issue as follows:

1) Add entries to /etc/hosts on the master mapping your hostnames to their public IPs

2) Install dnsmasq on the master (e.g. apt install -y dnsmasq)

3) Kill the k8s api server container on master (kubelet will recreate it)

4) Then systemctl restart docker (or reboot the master) for it to pick up the /etc/resolv.conf changes

See this issue for more information: https://github.com/kubernetes/helm/issues/1455
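Step 1 of the workaround can be made repeatable so that running it twice does not duplicate entries. The following is a sketch only. The helper name add_hosts_entry is hypothetical, 203.0.113.10 is a placeholder address from the documentation range, and the demonstration writes to a scratch file instead of /etc/hosts.

```shell
# add_hosts_entry FILE IP HOSTNAME: append "IP HOSTNAME" to FILE unless
# HOSTNAME already appears as a name on a non-comment line.
add_hosts_entry() {
  file="$1"; ip="$2"; host="$3"
  # Fields 2..NF of a hosts line are names; compare each one exactly.
  if awk -v h="$host" '
       !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
       END { exit !found }' "$file"; then
    return 0  # already mapped, nothing to do
  fi
  printf '%s %s\n' "$ip" "$host" >> "$file"
}

# Demonstration on a scratch file (placeholder IP, hostname from the error above):
hosts_tmp="$(mktemp)"
add_hosts_entry "$hosts_tmp" 203.0.113.10 kube-4gb-lon1-02
add_hosts_entry "$hosts_tmp" 203.0.113.10 kube-4gb-lon1-02  # second call is a no-op
cat "$hosts_tmp"
rm -f "$hosts_tmp"
```

On the master, the first argument would be /etc/hosts and the function would need to run as root.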

Q: On GKE (Google Container Engine) I get “No SSH tunnels currently open”

Error: Error forwarding ports: error upgrading connection: No SSH tunnels currently open. Were the targets able to accept an ssh-key for user "gke-[redacted]"?

Another variation of the error message is:

Unable to connect to the server: x509: certificate signed by unknown authority

A: The issue is that your local Kubernetes config file must have the correct credentials.

When you create a cluster on GKE, it will give you credentials, including SSL certificates and certificate authorities. These need to be stored in a Kubernetes config file (Default: ~/.kube/config) so that kubectl and helm can access them.

Q: When I run a Helm command, I get an error about the tunnel or proxy

A: Helm uses the Kubernetes proxy service to connect to the Tiller server. If the command kubectl proxy does not work for you, neither will Helm. Typically, the error is related to a missing socat service.

Q: Tiller crashes with a panic

When I run a command on Helm, Tiller crashes with an error like this:

Tiller is listening on :44134

Probes server is listening on :44135

Storage driver is ConfigMap

Cannot initialize Kubernetes connection: the server has asked for the client to provide credentials

2016-12-20 15:18:40.545739 I | storage.go:37: Getting release "bailing-chinchilla" (v1) from storage

panic: runtime error: invalid memory address or nil pointer dereference

[signal SIGSEGV: segmentation violation code=0x1 addr=0x0 pc=0x8053d5]

goroutine 77 [running]:

panic(0x1abbfc0, 0xc42000a040)

/usr/local/go/src/runtime/panic.go:500 +0x1a1

k8s.io/helm/vendor/k8s.io/kubernetes/pkg/client/unversioned.(*ConfigMaps).Get(0xc4200c6200, 0xc420536100, 0x15, 0x1ca7431, 0x6, 0xc42016b6a0)

/home/ubuntu/.go_workspace/src/k8s.io/helm/vendor/k8s.io/kubernetes/pkg/client/unversioned/configmap.go:58 +0x75

k8s.io/helm/pkg/storage/driver.(*ConfigMaps).Get(0xc4201d6190, 0xc420536100, 0x15, 0xc420536100, 0x15, 0xc4205360c0)

/home/ubuntu/.go_workspace/src/k8s.io/helm/pkg/storage/driver/cfgmaps.go:69 +0x62

k8s.io/helm/pkg/storage.(*Storage).Get(0xc4201d61a0, 0xc4205360c0, 0x12, 0xc400000001, 0x12, 0x0, 0xc420200070)

/home/ubuntu/.go_workspace/src/k8s.io/helm/pkg/storage/storage.go:38 +0x160

k8s.io/helm/pkg/tiller.(*ReleaseServer).uniqName(0xc42002a000, 0x0, 0x0, 0xc42016b800, 0xd66a13, 0xc42055a040, 0xc420558050, 0xc420122001)

/home/ubuntu/.go_workspace/src/k8s.io/helm/pkg/tiller/release_server.go:577 +0xd7

k8s.io/helm/pkg/tiller.(*ReleaseServer).prepareRelease(0xc42002a000, 0xc42027c1e0, 0xc42002a001, 0xc42016bad0, 0xc42016ba08)

/home/ubuntu/.go_workspace/src/k8s.io/helm/pkg/tiller/release_server.go:630 +0x71

k8s.io/helm/pkg/tiller.(*ReleaseServer).InstallRelease(0xc42002a000, 0x7f284c434068, 0xc420250c00, 0xc42027c1e0, 0x0, 0x31a9, 0x31a9)

/home/ubuntu/.go_workspace/src/k8s.io/helm/pkg/tiller/release_server.go:604 +0x78

k8s.io/helm/pkg/proto/hapi/services._ReleaseService_InstallRelease_Handler(0x1c51f80, 0xc42002a000, 0x7f284c434068, 0xc420250c00, 0xc42027c190, 0x0, 0x0, 0x0, 0x0, 0x0)

/home/ubuntu/.go_workspace/src/k8s.io/helm/pkg/proto/hapi/services/tiller.pb.go:747 +0x27d

k8s.io/helm/vendor/google.golang.org/grpc.(*Server).processUnaryRPC(0xc4202f3ea0, 0x28610a0, 0xc420078000, 0xc420264690, 0xc420166150, 0x288cbe8, 0xc420250bd0, 0x0, 0x0)

/home/ubuntu/.go_workspace/src/k8s.io/helm/vendor/google.golang.org/grpc/server.go:608 +0xc50

k8s.io/helm/vendor/google.golang.org/grpc.(*Server).handleStream(0xc4202f3ea0, 0x28610a0, 0xc420078000, 0xc420264690, 0xc420250bd0)

/home/ubuntu/.go_workspace/src/k8s.io/helm/vendor/google.golang.org/grpc/server.go:766 +0x6b0

k8s.io/helm/vendor/google.golang.org/grpc.(*Server).serveStreams.func1.1(0xc420124710, 0xc4202f3ea0, 0x28610a0, 0xc420078000, 0xc420264690)

/home/ubuntu/.go_workspace/src/k8s.io/helm/vendor/google.golang.org/grpc/server.go:419 +0xab

created by k8s.io/helm/vendor/google.golang.org/grpc.(*Server).serveStreams.func1

/home/ubuntu/.go_workspace/src/k8s.io/helm/vendor/google.golang.org/grpc/server.go:420 +0xa3

A: Check your security settings for Kubernetes.

A panic in Tiller is almost always the result of a failure to negotiate with the Kubernetes API server (at which point Tiller can no longer do anything useful, so it panics and exits).

Often, this is a result of authentication failing because the Pod in which Tiller is running does not have the right token.

To fix this, you will need to change your Kubernetes configuration. Make sure that --service-account-private-key-file from controller-manager and --service-account-key-file from apiserver point to the same x509 RSA key.

UPGRADING

My Helm used to work, then I upgraded. Now it is broken.

Q: After upgrade, I get the error “Client version is incompatible”. What’s wrong?

Tiller and Helm have to negotiate a common version to make sure that they can safely communicate without breaking API assumptions. That error means that the version difference is too great to safely continue. Typically, you need to upgrade Tiller manually for this.

The Installation Guide has definitive information about safely upgrading Helm and Tiller.

The rules for version numbers are as follows:

- Pre-release versions are incompatible with everything else. Alpha.1 is incompatible with Alpha.2.

- Patch revisions are compatible: 1.2.3 is compatible with 1.2.4

- Minor revisions are not compatible: 1.2.0 is not compatible with 1.3.0, though we may relax this constraint in the future.

- Major revisions are not compatible: 1.0.0 is not compatible with 2.0.0.
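The rules above can be written down as a small check. This is an illustrative sketch, not Helm's actual negotiation code. It assumes versions of the form MAJOR.MINOR.PATCH with an optional leading "v" and an optional "-prerelease" suffix, and it assumes that two identical version strings are compatible. The function name helm_versions_compatible is hypothetical.

```shell
# helm_versions_compatible A B: succeed if A and B are compatible under
# the rules listed above (sketch only, not Helm's own logic).
helm_versions_compatible() {
  a="${1#v}"; b="${2#v}"        # tolerate a leading "v", as in v2.0.0
  [ "$a" = "$b" ] && return 0   # assumption: identical versions are compatible
  case "$a$b" in
    *-*) return 1 ;;            # a pre-release is incompatible with anything else
  esac
  # Compare MAJOR.MINOR only; the patch component may differ.
  [ "${a%.*}" = "${b%.*}" ]
}

for pair in "1.2.3 1.2.4" "1.2.0 1.3.0" "1.0.0 2.0.0" "v2.0.0-alpha.1 v2.0.0-alpha.2"; do
  set -- $pair
  if helm_versions_compatible "$1" "$2"; then
    echo "$1 and $2: compatible"
  else
    echo "$1 and $2: incompatible"
  fi
done
```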

UNINSTALLING

I am trying to remove stuff.

Q: When I delete the Tiller deployment, how come all the releases are still there?

Releases are stored in ConfigMaps inside of the kube-system namespace. You will have to manually delete them to get rid of the record.

Q: I want to delete my local Helm. Where are all its files?

Along with the helm binary, Helm stores some files in $HELM_HOME, which is located by default in ~/.helm.


Using Helm

This guide explains the basics of using Helm (and Tiller) to manage packages on your Kubernetes cluster. It assumes that you have already installed the Helm client and the Tiller server (typically by helm init).

If you are simply interested in running a few quick commands, you may wish to begin with the Quickstart Guide. This chapter covers the particulars of Helm commands, and explains how to use Helm.

THREE BIG CONCEPTS

A Chart is a Helm package. It contains all of the resource definitions necessary to run an application, tool, or service inside of a Kubernetes cluster. Think of it like the Kubernetes equivalent of a Homebrew formula, an Apt dpkg, or a Yum RPM file.

A Repository is the place where charts can be collected and shared. It’s like Perl’s CPAN archive or the Fedora Package Database, but for Kubernetes packages.

A Release is an instance of a chart running in a Kubernetes cluster. One chart can often be installed many times into the same cluster. And each time it is installed, a new release is created. Consider a MySQL chart. If you want two databases running in your cluster, you can install that chart twice. Each one will have its own release, which will in turn have its own release name.

With these concepts in mind, we can now explain Helm like this:

Helm installs charts into Kubernetes, creating a new release for each installation. And to find new charts, you can search Helm chart repositories.

‘HELM SEARCH’: FINDING CHARTS

When you first install Helm, it is preconfigured to talk to the official Kubernetes charts repository. This repository contains a number of carefully curated and maintained charts. This chart repository is named stable by default.

You can see which charts are available by running helm search:

$ helm search

NAME VERSION DESCRIPTION

stable/drupal 0.3.2 One of the most versatile open source content m...

stable/jenkins 0.1.0 A Jenkins Helm chart for Kubernetes.

stable/mariadb 0.5.1 Chart for MariaDB

stable/mysql 0.1.0 Chart for MySQL

...

With no filter, helm search shows you all of the available charts. You can narrow down your results by searching with a filter:

$ helm search mysql

NAME VERSION DESCRIPTION

stable/mysql 0.1.0 Chart for MySQL

stable/mariadb 0.5.1 Chart for MariaDB

Now you will only see the results that match your filter.

Why is mariadb in the list? Because its package description relates it to MySQL. We can use helm inspect chart to see this:

$ helm inspect stable/mariadb

Fetched stable/mariadb to mariadb-0.5.1.tgz

description: Chart for MariaDB

engine: gotpl

home: https://mariadb.org

keywords:

- mariadb

- mysql

- database

- sql

...

Search is a good way to find available packages. Once you have found a package you want to install, you can use helm install to install it.

‘HELM INSTALL’: INSTALLING A PACKAGE

To install a new package, use the helm install command. At its simplest, it takes only one argument: The name of the chart.

$ helm install stable/mariadb

Fetched stable/mariadb-0.3.0 to /Users/mattbutcher/Code/Go/src/k8s.io/helm/mariadb-0.3.0.tgz

happy-panda

Last Deployed: Wed Sep 28 12:32:28 2016

Namespace: default

Status: DEPLOYED

Resources:

==> extensions/Deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

happy-panda-mariadb 1 0 0 0 1s

==> v1/Secret

NAME TYPE DATA AGE

happy-panda-mariadb Opaque 2 1s

==> v1/Service

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

happy-panda-mariadb 10.0.0.70 <none> 3306/TCP 1s

Notes:

MariaDB can be accessed via port 3306 on the following DNS name from within your cluster:

happy-panda-mariadb.default.svc.cluster.local

To connect to your database run the following command:

kubectl run happy-panda-mariadb-client --rm --tty -i --image bitnami/mariadb --command -- mysql -h happy-panda-mariadb

Now the mariadb chart is installed. Note that installing a chart creates a new release object. The release above is named happy-panda. (If you want to use your own release name, simply use the --name flag on helm install.)

During installation, the helm client will print useful information about which resources were created, what the state of the release is, and also whether there are additional configuration steps you can or should take.

Helm does not wait until all of the resources are running before it exits. Many charts require Docker images that are over 600M in size, and may take a long time to install into the cluster.

To keep track of a release’s state, or to re-read configuration information, you can use helm status:

$ helm status happy-panda

Last Deployed: Wed Sep 28 12:32:28 2016

Namespace: default

Status: DEPLOYED

Resources:

==> v1/Service

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

happy-panda-mariadb 10.0.0.70 <none> 3306/TCP 4m

==> extensions/Deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

happy-panda-mariadb 1 1 1 1 4m

==> v1/Secret

NAME TYPE DATA AGE

happy-panda-mariadb Opaque 2 4m

Notes:

MariaDB can be accessed via port 3306 on the following DNS name from within your cluster:

happy-panda-mariadb.default.svc.cluster.local

To connect to your database run the following command:

kubectl run happy-panda-mariadb-client --rm --tty -i --image bitnami/mariadb --command -- mysql -h happy-panda-mariadb

The above shows the current state of your release.

Customizing the Chart Before Installing

Installing the way we have here will only use the default configuration options for this chart. Many times, you will want to customize the chart to use your preferred configuration.

To see what options are configurable on a chart, use helm inspect values:

helm inspect values stable/mariadb

Fetched stable/mariadb-0.3.0.tgz to /Users/mattbutcher/Code/Go/src/k8s.io/helm/mariadb-0.3.0.tgz

## Bitnami MariaDB image version

## ref: https://hub.docker.com/r/bitnami/mariadb/tags/

##

## Default: none

imageTag: 10.1.14-r3

## Specify a imagePullPolicy

## Default to 'Always' if imageTag is 'latest', else set to 'IfNotPresent'

## ref: http://kubernetes.io/docs/user-guide/images/#pre-pulling-images

##

# imagePullPolicy:

## Specify password for root user

## ref: https://github.com/bitnami/bitnami-docker-mariadb/blob/master/README.md#setting-the-root-password-on-first-run

##

# mariadbRootPassword:

## Create a database user

## ref: https://github.com/bitnami/bitnami-docker-mariadb/blob/master/README.md#creating-a-database-user-on-first-run

##

# mariadbUser:

# mariadbPassword:

## Create a database

## ref: https://github.com/bitnami/bitnami-docker-mariadb/blob/master/README.md#creating-a-database-on-first-run

##

# mariadbDatabase:

You can then override any of these settings in a YAML formatted file, and then pass that file during installation.

$ echo '{mariadbUser: user0, mariadbDatabase: user0db}' > config.yaml

$ helm install -f config.yaml stable/mariadb

The above will create a default MariaDB user with the name user0, and grant this user access to a newly created user0db database, but will accept all the rest of the defaults for that chart.

There are two ways to pass configuration data during install:

- --values (or -f): Specify a YAML file with overrides. This can be specified multiple times and the rightmost file will take precedence.

- --set: Specify overrides on the command line.

If both are used, --set values are merged into --values with higher precedence.
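The precedence rules above can be modelled with a small recursive merge. This is only an illustrative sketch in Python, not Helm's own code: the function names `merge_values` and `effective_values` are made up here, and the assumption is that nested maps are merged key by key while later sources win on conflicts.

```python
from copy import deepcopy


def merge_values(base, override):
    """Return a copy of base with override merged in; override wins on conflicts."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Both sides are maps: merge them key by key.
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


def effective_values(chart_defaults, values_files, set_overrides):
    """Apply -f files left to right, then the --set overrides last."""
    result = deepcopy(chart_defaults)
    for file_values in values_files:
        result = merge_values(result, file_values)
    return merge_values(result, set_overrides)


# Hypothetical inputs: chart defaults, two -f files, and one --set override.
defaults = {"imageTag": "10.1.14-r3"}
first_file = {"mariadbUser": "user0", "mariadbDatabase": "user0db"}
second_file = {"mariadbUser": "user1"}
from_set = {"mariadbDatabase": "setdb"}

final = effective_values(defaults, [first_file, second_file], from_set)
print(final)
```

Here the rightmost file decides `mariadbUser`, and the `--set` value decides `mariadbDatabase`, while the untouched default `imageTag` survives.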

The Format and Limitations of --set

The --set option takes zero or more name/value pairs. At its simplest, it is used like this: --set name=value. The YAML equivalent of that is:

name: value

Multiple values are separated by , characters. So --set a=b,c=d becomes:

a: b

c: d

More complex expressions are supported. For example, --set outer.inner=value is translated into this:

outer:

  inner: value

Lists can be expressed by enclosing values in { and }. For example, --set name={a, b, c} translates to:

name:

- a

- b

- c

As of Helm 2.5.0, it is possible to access list items using an array index syntax. For example, --set servers[0].port=80 becomes:

servers:

- port: 80

Multiple values can be set this way. The line --set servers[0].port=80,servers[0].host=example becomes:

servers:

- port: 80

  host: example

Sometimes you need to use special characters in your --set lines. You can use a backslash to escape the characters; --set name=value1\,value2 will become:

name: "value1,value2"

Similarly, you can escape dot sequences as well, which may come in handy when charts use the toYaml function to parse annotations, labels and node selectors. The syntax for --set nodeSelector."kubernetes\.io/role"=master becomes:

nodeSelector:

  kubernetes.io/role: master

Deeply nested data structures can be difficult to express using --set. Chart designers are encouraged to consider the --set usage when designing the format of a values.yaml file.
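To make the translation rules above concrete, here is a simplified Python model of how a --set string maps to nested data. It is a sketch under stated assumptions and not Helm's actual parser: `parse_set` and its helpers are hypothetical names, every value is kept as a string (no type conversion), and the array index syntax is left out.

```python
def split_unescaped(text, sep):
    """Split on sep, skipping backslash-escaped characters and anything inside { }."""
    parts, current, depth, i = [], [], 0, 0
    while i < len(text):
        ch = text[i]
        if ch == "\\" and i + 1 < len(text):
            current.append(text[i:i + 2])  # keep the escape for a later stage
            i += 2
            continue
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
        if ch == sep and depth == 0:
            parts.append("".join(current))
            current = []
        else:
            current.append(ch)
        i += 1
    parts.append("".join(current))
    return parts


def unescape(text):
    """Remove one level of backslash escaping."""
    out, i = [], 0
    while i < len(text):
        if text[i] == "\\" and i + 1 < len(text):
            out.append(text[i + 1])
            i += 2
        else:
            out.append(text[i])
            i += 1
    return "".join(out)


def parse_set(expression):
    """Turn 'a=b,outer.inner=c,list={x,y}' into a nested dict."""
    result = {}
    for pair in split_unescaped(expression, ","):
        key, _, raw = pair.partition("=")
        if raw.startswith("{") and raw.endswith("}"):
            value = [unescape(item.strip()) for item in split_unescaped(raw[1:-1], ",")]
        else:
            value = unescape(raw)
        path = [unescape(part) for part in split_unescaped(key, ".")]
        node = result
        for part in path[:-1]:
            node = node.setdefault(part, {})
        node[path[-1]] = value
    return result


print(parse_set("a=b,c=d"))
print(parse_set("outer.inner=value"))
print(parse_set("name={a, b, c}"))
print(parse_set(r"name=value1\,value2"))
print(parse_set(r"nodeSelector.kubernetes\.io/role=master"))
```

The last call shows the string as the program receives it, after the shell has already removed the double quotes around the key.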

More Installation Methods

The helm install command can install from several sources:

- A chart repository (as we’ve seen above)

- A local chart archive (helm install foo-0.1.1.tgz)

- An unpacked chart directory (helm install path/to/foo)

- A full URL (helm install https://example.com/charts/foo-1.2.3.tgz)

‘HELM UPGRADE’ AND ‘HELM ROLLBACK’: UPGRADING A RELEASE, AND RECOVERING ON FAILURE

When a new version of a chart is released, or when you want to change the configuration of your release, you can use the helm upgrade command.

An upgrade takes an existing release and upgrades it according to the information you provide. Because Kubernetes charts can be large and complex, Helm tries to perform the least invasive upgrade. It will only update things that have changed since the last release.

$ helm upgrade -f panda.yaml happy-panda stable/mariadb

Fetched stable/mariadb-0.3.0.tgz to /Users/mattbutcher/Code/Go/src/k8s.io/helm/mariadb-0.3.0.tgz

happy-panda has been upgraded. Happy Helming!

Last Deployed: Wed Sep 28 12:47:54 2016

Namespace: default

Status: DEPLOYED

...

In the above case, the happy-panda release is upgraded with the same chart, but with a new YAML file:

mariadbUser: user1

We can use helm get values to see whether that new setting took effect.

$ helm get values happy-panda

mariadbUser: user1

The helm get command is a useful tool for looking at a release in the cluster. And as we can see above, it shows that our new values from panda.yaml were deployed to the cluster.

Now, if something does not go as planned during a release, it is easy to roll back to a previous release using helm rollback [RELEASE] [REVISION].

$ helm rollback happy-panda 1

The above rolls back our happy-panda to its very first release version. A release version is an incremental revision. Every time an install, upgrade, or rollback happens, the revision number is incremented by 1. The first revision number is always 1. And we can use helm history [RELEASE] to see revision numbers for a certain release.
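The revision bookkeeping described above can be pictured with a tiny in-memory model. This Python sketch is purely illustrative: the `Release` class is hypothetical and only mirrors the rule that install, upgrade, and rollback each add one revision, starting at 1.

```python
class Release:
    """Toy model of a release history; not how Tiller stores releases."""

    def __init__(self, name, values):
        self.name = name
        # The install itself is always revision 1.
        self.history = [{"revision": 1, "action": "install", "values": dict(values)}]

    def _record(self, action, values):
        self.history.append(
            {"revision": len(self.history) + 1, "action": action, "values": dict(values)}
        )

    @property
    def current(self):
        return self.history[-1]

    def upgrade(self, values):
        self._record("upgrade", values)

    def rollback(self, revision):
        # A rollback does not rewind the counter; it records a new revision
        # that carries the configuration of the older one.
        old = self.history[revision - 1]
        self._record("rollback to %d" % revision, old["values"])


release = Release("happy-panda", {})
release.upgrade({"mariadbUser": "user1"})
release.rollback(1)

for entry in release.history:
    print(entry["revision"], entry["action"], entry["values"])
```

After the rollback the release is at revision 3, but its values match revision 1 again.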

HELPFUL OPTIONS FOR INSTALL/UPGRADE/ROLLBACK

There are several other helpful options you can specify for customizing the behavior of Helm during an install/upgrade/rollback. Please note that this is not a full list of cli flags. To see a description of all flags, just run helm <command> --help.

- --timeout: A value in seconds to wait for Kubernetes commands to complete. This defaults to 300 (5 minutes).

- --wait: Waits until all Pods are in a ready state, PVCs are bound, Deployments have minimum (Desired minus maxUnavailable) Pods in ready state and Services have an IP address (and Ingress if a LoadBalancer) before marking the release as successful. It will wait for as long as the --timeout value. If timeout is reached, the release will be marked as FAILED.

Note: In a scenario where a Deployment has replicas set to 1 and maxUnavailable is not set to 0 as part of the rolling update strategy, --wait will return as ready as it has satisfied the minimum Pod in ready condition.

- --no-hooks: This skips running hooks for the command.

- --recreate-pods (only available for upgrade and rollback): This flag will cause all pods to be recreated (with the exception of pods belonging to deployments).
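The interaction between --wait, --timeout, and maxUnavailable can be sketched as a polling loop. The Python below is a rough model only: `wait_for_release` and `minimum_ready` are hypothetical helpers, the readiness check is reduced to a pod count, and the polling interval is an arbitrary choice.

```python
import time


def minimum_ready(desired, max_unavailable):
    """Pods that must be ready: Desired minus maxUnavailable, never below zero."""
    return max(desired - max_unavailable, 0)


def wait_for_release(count_ready, desired, max_unavailable,
                     timeout_seconds=300, poll_seconds=2,
                     sleep=time.sleep, clock=time.monotonic):
    """Poll count_ready() until enough pods are ready or the timeout expires."""
    deadline = clock() + timeout_seconds
    needed = minimum_ready(desired, max_unavailable)
    while True:
        if count_ready() >= needed:
            return "DEPLOYED"
        if clock() >= deadline:
            return "FAILED"
        sleep(poll_seconds)


# With replicas=1 and maxUnavailable=1 the minimum is 0, so the wait
# succeeds immediately even though no pod is ready yet.
print(wait_for_release(lambda: 0, desired=1, max_unavailable=1))
```

This is why the note above matters: a Deployment with a single replica and a non-zero maxUnavailable satisfies the condition before any pod is actually serving.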

‘HELM DELETE’: DELETING A RELEASE

When it is time to uninstall or delete a release from the cluster, use the helm delete command:

$ helm delete happy-panda

This will remove the release from the cluster. You can see all of your currently deployed releases with the helm list command:

$ helm list

NAME VERSION UPDATED STATUS CHART

inky-cat 1 Wed Sep 28 12:59:46 2016 DEPLOYED alpine-0.1.0

From the output above, we can see that the happy-panda release was deleted.

However, Helm always keeps records of what releases happened. Need to see the deleted releases? helm list --deleted shows those, and helm list --all shows all of the releases (deleted and currently deployed, as well as releases that failed):

$ helm list --all

NAME VERSION UPDATED STATUS CHART

happy-panda 2 Wed Sep 28 12:47:54 2016 DELETED mariadb-0.3.0

inky-cat 1 Wed Sep 28 12:59:46 2016 DEPLOYED alpine-0.1.0

kindred-angelf 2 Tue Sep 27 16:16:10 2016 DELETED alpine-0.1.0

Because Helm keeps records of deleted releases, a release name cannot be re-used. (If you really need to re-use a release name, you can use the --replace flag, but it will simply re-use the existing release and replace its resources.)

Note that because releases are preserved in this way, you can rollback a deleted resource, and have it re-activate.

‘HELM REPO’: WORKING WITH REPOSITORIES

So far, we’ve been installing charts only from the stable repository. But you can configure helm to use other repositories. Helm provides several repository tools under the helm repo command.

You can see which repositories are configured using helm repo list:

$ helm repo list

NAME URL

stable https://kubernetes-charts.storage.googleapis.com

local http://localhost:8879/charts

mumoshu https://mumoshu.github.io/charts

And new repositories can be added with helm repo add:

$ helm repo add dev https://example.com/dev-charts

Because chart repositories change frequently, at any point you can make sure your Helm client is up to date by running helm repo update.

CREATING YOUR OWN CHARTS

The Chart Development Guide explains how to develop your own charts. But you can get started quickly by using the helm create command:

$ helm create deis-workflow

Creating deis-workflow

Now there is a chart in ./deis-workflow. You can edit it and create your own templates.

As you edit your chart, you can validate that it is well-formatted by running helm lint.

When it’s time to package the chart up for distribution, you can run the helm package command:

$ helm package deis-workflow

deis-workflow-0.1.0.tgz

And that chart can now easily be installed by helm install:

$ helm install ./deis-workflow-0.1.0.tgz

...

Charts that are archived can be loaded into chart repositories. See the documentation for your chart repository server to learn how to upload.

Note: The stable repository is managed on the Kubernetes Charts GitHub repository. That project accepts chart source code, and (after audit) packages those for you.

TILLER, NAMESPACES AND RBAC

In some cases you may wish to scope Tiller or deploy multiple Tillers to a single cluster. Here are some best practices when operating in those circumstances.

- Tiller can be installed into any namespace. By default, it is installed into kube-system. You can run multiple Tillers provided they each run in their own namespace.

- Limiting Tiller to only be able to install into specific namespaces and/or resource types is controlled by Kubernetes RBAC roles and rolebindings. You can add a service account to Tiller when configuring Helm via helm init --service-account <NAME>. More information is available in the Helm documentation on role-based access control.

- Release names are unique PER TILLER INSTANCE.

- Charts should only contain resources that exist in a single namespace.

- It is not recommended to have multiple Tillers configured to manage resources in the same namespace.

CONCLUSION

This chapter has covered the basic usage patterns of the helm client, including searching, installation, upgrading, and deleting. It has also covered useful utility commands like helm status, helm get, and helm repo.

For more information on these commands, take a look at Helm’s built-in help: helm help.

In the next chapter, we look at the process of developing charts.


The Helm Plugins Guide

Helm 2.1.0 introduced the concept of a client-side Helm plugin. A plugin is a tool that can be accessed through the helm CLI, but which is not part of the built-in Helm codebase.

Existing plugins can be found in the related section of the Helm documentation or by searching GitHub.

This guide explains how to use and create plugins.

AN OVERVIEW

Helm plugins are add-on tools that integrate seamlessly with Helm. They provide a way to extend the core feature set of Helm, but without requiring every new feature to be written in Go and added to the core tool.

Helm plugins have the following features:

- They can be added and removed from a Helm installation without impacting the core Helm tool.

- They can be written in any programming language.

- They integrate with Helm, and will show up in helm help and other places.

Helm plugins live in $(helm home)/plugins.

The Helm plugin model is partially modeled on Git’s plugin model. To that end, you may sometimes hear helm referred to as the porcelain layer, with plugins being the plumbing. This is a shorthand way of suggesting that Helm provides the user experience and top level processing logic, while the plugins do the “detail work” of performing a desired action.

INSTALLING A PLUGIN

A Helm plugin management system is in the works. But in the short term, plugins are installed by copying the plugin directory into $(helm home)/plugins.

$ cp -a myplugin/ $(helm home)/plugins/

If you have a plugin tar distribution, simply untar the plugin into the $(helm home)/plugins directory.

BUILDING PLUGINS

In many ways, a plugin is similar to a chart. Each plugin has a top-level directory, and then a plugin.yaml file.

$(helm home)/plugins/

|- keybase/

|

|- plugin.yaml

|- keybase.sh

In the example above, the keybase plugin is contained inside of a directory named keybase. It has two files: plugin.yaml (required) and an executable script, keybase.sh (optional).

The core of a plugin is a simple YAML file named plugin.yaml. Here is a plugin YAML for a plugin that adds support for Keybase operations:

name: "keybase"

version: "0.1.0"

usage: "Integrate Keybase.io tools with Helm"

description: |-

  This plugin provides Keybase services to Helm.

ignoreFlags: false

useTunnel: false

command: "$HELM_PLUGIN_DIR/keybase.sh"

The name is the name of the plugin. When Helm executes this plugin, this is the name it will use (e.g. helm NAME will invoke this plugin).

name should match the directory name. In our example above, that means the plugin with name: keybase should be contained in a directory named keybase.

Restrictions on name:

- name cannot duplicate one of the existing helm top-level commands.

- name must be restricted to the characters ASCII a-z, A-Z, 0-9, _ and -.

version is the SemVer 2 version of the plugin. usage and description are both used to generate the help text of a command.

The ignoreFlags switch tells Helm to not pass flags to the plugin. So if a plugin is called with helm myplugin --foo and ignoreFlags: true, then --foo is silently discarded.

The useTunnel switch indicates that the plugin needs a tunnel to Tiller. This should be set to true anytime a plugin talks to Tiller. It will cause Helm to open a tunnel, and then set $TILLER_HOST to the right local address for that tunnel. But don’t worry: if Helm detects that a tunnel is not necessary because Tiller is running locally, it will not create the tunnel.

Finally, and most importantly, command is the command that this plugin will execute when it is called. Environment variables are interpolated before the plugin is executed. The pattern above illustrates the preferred way to indicate where the plugin program lives.

There are some strategies for working with plugin commands:

- If a plugin includes an executable, the executable for a command: should be packaged in the plugin directory.

- The command: line will have any environment variables expanded before execution. $HELM_PLUGIN_DIR will point to the plugin directory.

- The command itself is not executed in a shell. So you can’t oneline a shell script.

- Helm injects lots of configuration into environment variables. Take a look at the environment to see what information is available.

- Helm makes no assumptions about the language of the plugin. You can write it in whatever you prefer.

- Commands are responsible for implementing specific help text for -h and --help. Helm will use usage and description for helm help and helm help myplugin, but will not handle helm myplugin --help.
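The points about variable expansion and the absence of a shell can be illustrated with a short sketch. This Python model is an assumption about the general mechanism, not a copy of Helm's implementation: `build_plugin_argv` is a hypothetical helper, and splitting the command with `shlex` is a choice made for this example.

```python
import shlex
from string import Template


def build_plugin_argv(command, plugin_dir, extra_args, env=None):
    """Expand environment variables in command and return an argv list."""
    variables = dict(env or {}, HELM_PLUGIN_DIR=plugin_dir)
    # Variables are substituted in the string itself; unknown ones are left as-is.
    expanded = Template(command).safe_substitute(variables)
    # The result is an argument vector, so shell syntax such as pipes,
    # redirects, or `&&` has no special meaning here.
    return shlex.split(expanded) + list(extra_args)


argv = build_plugin_argv(
    "$HELM_PLUGIN_DIR/keybase.sh",
    "/home/user/.helm/plugins/keybase",  # hypothetical plugin location
    ["--foo"],
)
print(argv)
```

Because the result is passed on as a plain argument list, a one-line shell script in command: would arrive as literal arguments rather than being interpreted.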

ENVIRONMENT VARIABLES

When Helm executes a plugin, it passes the outer environment to the plugin, and also injects some additional environment variables.

Variables like KUBECONFIG are set for the plugin if they are set in the outer environment.

The following variables are guaranteed to be set:

- HELM_PLUGIN: The path to the plugins directory

- HELM_PLUGIN_NAME: The name of the plugin, as invoked by helm. So helm myplug will have the short name myplug.

- HELM_PLUGIN_DIR: The directory that contains the plugin.

- HELM_BIN: The path to the helm command (as executed by the user).

- HELM_HOME: The path to the Helm home.

- HELM_PATH_*: Paths to important Helm files and directories are stored in environment variables prefixed by HELM_PATH.

- TILLER_HOST: The domain:port to Tiller. If a tunnel is created, this will point to the local endpoint for the tunnel. Otherwise, it will point to $HELM_HOST, --host, or the default host (according to Helm’s rules of precedence).

While HELM_HOST may be set, there is no guarantee that it will point to the correct Tiller instance. This is done to allow plugin developers to access HELM_HOST in its raw state when the plugin itself needs to manually configure a connection.
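As an illustration of how a plugin might consume these variables, here is a minimal plugin body written in Python. It is a sketch, not an official template: the plugin name `myplug` and the help text are invented for this example, and the script simply reports a few of the variables listed above.

```python
import os
import sys

HELP_TEXT = "usage: helm myplug [-h|--help]\n\nPrints the environment that Helm passes to the plugin."

REPORTED = ("HELM_PLUGIN_NAME", "HELM_PLUGIN_DIR", "HELM_BIN", "HELM_HOME", "TILLER_HOST")


def main(argv, env):
    """Return the text the plugin should print for the given arguments."""
    # The plugin itself is responsible for -h and --help.
    if "-h" in argv or "--help" in argv:
        return HELP_TEXT
    lines = []
    for name in REPORTED:
        lines.append("%s=%s" % (name, env.get(name, "<unset>")))
    return "\n".join(lines)


if __name__ == "__main__":
    print(main(sys.argv[1:], os.environ))
```

Taking the environment as a parameter keeps `main` easy to exercise outside of Helm, for example with a plain dictionary.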

A NOTE ON USETUNNEL

If a plugin specifies useTunnel: true, Helm will do the following (in order):

- Parse global flags and the environment

- Create the tunnel

- Set TILLER_HOST

- Execute the plugin

- Close the tunnel

The tunnel is removed as soon as the command returns. So, for example, a command cannot background a process and assume that that process will be able to use the tunnel.
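The ordering above, and the fact that the tunnel disappears when the command returns, maps naturally onto a context manager. The following Python sketch only models the sequence of events: no real tunnel is opened, and the local address `127.0.0.1:44134` is a placeholder chosen for the example.

```python
from contextlib import contextmanager


@contextmanager
def tunnel(events, local_address="127.0.0.1:44134"):
    """Stand-in for a tunnel; it only records when it is opened and closed."""
    events.append("create tunnel")
    try:
        yield local_address
    finally:
        # Runs as soon as the plugin returns, even if it raised an error.
        events.append("close tunnel")


def run_plugin_with_tunnel(plugin, env):
    events = ["parse global flags and environment"]
    with tunnel(events) as address:
        plugin_env = dict(env, TILLER_HOST=address)
        events.append("set TILLER_HOST")
        events.append("execute plugin")
        plugin(plugin_env)
    return events


seen = {}
events = run_plugin_with_tunnel(seen.update, {"HELM_HOME": "/tmp/helm"})
print(events)
```

Anything the plugin leaves running in the background would outlive the `with` block, which is the situation the warning above describes.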

A NOTE ON FLAG PARSING

When executing a plugin, Helm will parse global flags for its own use. Some of these flags are not passed on to the plugin.

- --debug: If this is specified, $HELM_DEBUG is set to 1

- --home: This is converted to $HELM_HOME

- --host: This is converted to $HELM_HOST

- --kube-context: This is simply dropped. If your plugin uses useTunnel, this is used to set up the tunnel for you.

Plugins should display help text and then exit for -h and --help. In all other cases, plugins may use flags as appropriate.
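The flag handling described above can be approximated as a function that separates Helm's global flags from the arguments the plugin receives. This Python sketch is a simplified model and not Helm's real flag parser: `split_global_flags` is a hypothetical name, and accepting both `--flag value` and `--flag=value` is an assumption made for the example.

```python
# Global flags that take a value, mapped to the variable they become.
# None means the flag is consumed and dropped.
VALUE_FLAGS = {"--home": "HELM_HOME", "--host": "HELM_HOST", "--kube-context": None}


def split_global_flags(args):
    """Return (environment additions, arguments left for the plugin)."""
    env, rest = {}, []
    i = 0
    while i < len(args):
        arg = args[i]
        name, has_equals, value = arg.partition("=")
        if arg == "--debug":
            env["HELM_DEBUG"] = "1"
        elif name in VALUE_FLAGS:
            if not has_equals:
                # Value is given as the next argument.
                i += 1
                value = args[i] if i < len(args) else ""
            target = VALUE_FLAGS[name]
            if target is not None:
                env[target] = value
        else:
            rest.append(arg)
        i += 1
    return env, rest


env, rest = split_global_flags(
    ["--debug", "--host", "tiller:44134", "--kube-context=dev", "--foo", "bar"]
)
print(env)
print(rest)
```

Only `--foo bar` reaches the plugin in this model; the other flags have been turned into environment variables or dropped.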

Serverless Architectures: Monoliths, Nanoservices, Microservices & Hybrids

The Monolith

Whenever I hear “monolith”, I think of a massive LAMP project with a single, burning hot MySQL database (not always the case).

In Serverless, the monolith architecture sends all requests to a single Lambda function, app.js. Users and games have nothing to do with one another, but the application logic for both lives in the same Lambda function.
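A monolithic function of this kind typically does its own routing. The sketch below uses Python rather than the Node.js of the original project, purely for illustration; the event fields `httpMethod` and `path`, the route table, and the handler functions are assumptions made for this example.

```python
def get_user(user_id):
    return {"resource": "user", "id": user_id}


def get_game(game_id):
    return {"resource": "game", "id": game_id}


# One function owns every route, so users and games share a deployment.
ROUTES = {
    ("GET", "users"): get_user,
    ("GET", "games"): get_game,
}


def handler(event, context=None):
    """Single entry point that dispatches on HTTP method and first path segment."""
    resource, _, item_id = event["path"].strip("/").partition("/")
    route = ROUTES.get((event["httpMethod"], resource))
    if route is None:
        return {"statusCode": 404, "body": "not found"}
    return {"statusCode": 200, "body": route(item_id)}


print(handler({"httpMethod": "GET", "path": "/users/42"}))
print(handler({"httpMethod": "GET", "path": "/games/7"}))
```

Every new endpoint adds another entry to the same table, which is where the coupling and complexity concerns discussed next come from.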

Pros

We found that the greatest advantage the monolith had over nanoservices and microservices was speed of deployment. With nanoservices and microservices, you have to deploy multiple copies of dependent node_modules (with Node.js) and any library code that your functions share, which can be slow. With the monolith, it’s a single function deployment to all API endpoints, so deployment is faster. On the other hand, how common is it to want to deploy all endpoints…

Cons

This architecture in Serverless has similar drawbacks to the monolithic architecture in general:

- Tighter coupling. In the example above, app.js is responsible for both users and games. If a bug gets introduced into the users part of the function, it’s more likely that the games part might break too

- Complexity. If all application logic is in a single function, the function can get more complicated which can slow down development and make it easier to introduce bugs

Microservices

In Serverless, the microservices architecture uses one Lambda function per resource, for example users.js and games.js.


Pros

The microservices architecture in Serverless inherits the advantages of microservices in general. A couple:

- Separation of concerns. If a bug has been introduced into games.js, calls to users.js should carry on working. Of course, maybe GET /users/:id might contact the games microservice to get games that the user plays but if users.js and games.js are proper microservices then users.js should handle games.js failing and vice versa

- Less complexity. Adding additional functionality to games.js doesn’t make the users.js codebase any more complicated

Cons

As mentioned above with the monolith, microservices in Serverless can result in slower deployments.

Nanoservices

Nanoservices take microservices to the extreme: one function per endpoint instead of one per resource.


Pros

Nanoservices take the advantages of microservices and amplify them. Separation of concerns is greater: get_users.js is probably going to be simpler than a users.js that handles everything to do with a user.

Cons

Again, this is similar to microservices but even more so: the number of functions to deploy can get huge, so deployment time can increase.

Hybrid

There is nothing to stop developers taking a hybrid approach to their architecture, e.g. a mixture of nanoservices and microservices. In our example, if there was a lot of game functionality, it might make sense to split that functionality into nanoservices, but if there is less functionality related to users, a microservice could be more appropriate.
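One way to picture the difference between the three layouts is to compute which function file each endpoint would live in. This Python sketch is illustrative only: the endpoint list and the file naming scheme (`get_games_by_id.js` and so on) are made up for the example.

```python
ENDPOINTS = [
    ("GET", "/users"), ("GET", "/users/:id"), ("POST", "/users"),
    ("GET", "/games"), ("GET", "/games/:id"), ("POST", "/games"),
]


def function_for(method, path, nano_resources):
    """Name the function file that serves an endpoint."""
    resource = path.strip("/").split("/")[0]
    if resource not in nano_resources:
        # Microservice: one function per resource.
        return "%s.js" % resource
    # Nanoservice: one function per endpoint.
    suffix = "_by_id" if path.endswith("/:id") else ""
    return "%s_%s%s.js" % (method.lower(), resource, suffix)


def deployment_plan(nano_resources):
    """Sorted list of distinct functions that would need to be deployed."""
    return sorted({function_for(m, p, nano_resources) for m, p in ENDPOINTS})


print(deployment_plan(set()))               # microservices everywhere
print(deployment_plan({"games"}))           # hybrid: games split, users kept together
print(deployment_plan({"users", "games"}))  # nanoservices everywhere
```

The length of each plan is a rough proxy for the deployment cost discussed in the sections above.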


If you have any examples of how you’re architecting your Serverless projects, tell us about it!

Docker – Ubuntu – bash: ping: command not found

Docker images are pretty minimal, but you can install ping in your official ubuntu docker image via:

apt-get update

apt-get install iputils-ping

Chances are you don't need ping in your image and just want to use it for testing purposes. The above example will help you out.

But if you need ping to exist on your image, you can create a Dockerfile or commit the container you ran the above commands in to a new image.

Commit:

docker commit -m "Installed iputils-ping" --author "Your Name <name@domain.com>" ContainerNameOrId yourrepository/imagename:tag

Dockerfile:

FROM ubuntu

RUN apt-get update && apt-get install -y iputils-ping

CMD bash

Kubernetes Issues

- The pods in kubernetes are in pending state when we execute kubectl get pods

Execute the following command to see the root cause:

kubectl get events

You will see output as follows:

LAST SEEN FIRST SEEN COUNT NAME KIND SUBOBJECT TYPE REASON SOURCE MESSAGE

1m 14h 3060 hello-nginx-5d47cdc4b7-8btwf.14ecd67c4676131c Pod Warning FailedScheduling default-scheduler No nodes are available that match all of the predicates: PodToleratesNodeTaints (1).

This error usually occurs when we try to create a pod on the master node.

Execute the following command, which removes the NoSchedule taint from the node so that pods can be scheduled on it:

kubectl taint nodes <nodeName> node-role.kubernetes.io/master:NoSchedule-

- helm install stable/mysql: Error: no available release name found

Execute the helm ls command to get the root cause:

The error I received is

Error: configmaps is forbidden: User “system:serviceaccount:kube-system:default” cannot list configmaps in the namespace “kube-system”

The default serviceaccount does not have API permissions. Helm likely needs to be assigned a service account, and that service account given API permissions.

The commands used to solve this are:

kubectl create serviceaccount --namespace kube-system tiller

kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'

helm init --service-account tiller --upgrade

After that, if you get the following error:

Error: forwarding ports: error upgrading connection: unable to upgrade connection: pod not found (“tiller-deploy-cffb976df-m5z6f_kube-system”)

Then execute helm init --upgrade

- kubernetes pods keep crashing with “CrashLoopBackOff” but I can’t find any log

I needed to keep a pod running for subsequent kubectl exec calls, but the pod was being killed by the k8s cluster because it had completed running all its tasks. I managed to keep the pod running by starting it with a command that does not stop on its own, as in:

kubectl run YOUR_POD_NAME -n YOUR_NAMESPACE --image SOME_PUBLIC_IMAGE:latest --command -- tail -f /dev/null

- Create busybox kubernetes pod

kubectl run -i --tty busybox --image=busybox --restart=Never -- sh

- Kubernetes pods cannot connect to internet kubeadm:

If your pods cannot connect to the internet, you can check the following:

Spin up a busybox pod and execute the following inside it:

ping 8.8.8.8

ping google.com

route -n

The route -n output shows the IP of the gateway. Check whether you can ping the gateway.

On the kubernetes master node, check the IP of the kube-dns pod with the command:

kubectl get pods -n kube-system -o wide | grep kube-dns

This returns an IP in its output. In your pod container, check whether this IP is present as a nameserver.

Run ifconfig in the pod container and note the IP address range assigned to the container.

On the kubernetes master node, run ifconfig and check which bridge’s IP range the previously noted address belongs to.

If it belongs to an interface other than the expected one, run:

brctl show

Check whether the bridge has an interface attached to it. If not, this is the reason the pods do not have an internet connection.

You can attach the interface with this command:

brctl addif mybridge eth0

This issue can occur with the weave network. Try a kubeadm reset and add a flannel network instead.
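The step of matching a pod address against the bridges seen in ifconfig can also be done programmatically. The Python sketch below uses only the standard `ipaddress` module; the bridge names and address ranges are hypothetical values standing in for whatever ifconfig reports on your master node.

```python
import ipaddress


def bridge_for_ip(pod_ip, bridges):
    """Return the name of the bridge whose subnet contains pod_ip, or None."""
    address = ipaddress.ip_address(pod_ip)
    for name, cidr in bridges.items():
        # strict=False accepts an interface address such as 10.244.0.1/24
        # and treats it as the enclosing network.
        if address in ipaddress.ip_network(cidr, strict=False):
            return name
    return None


# Hypothetical interface address and prefix length for each bridge.
bridges = {
    "docker0": "172.17.0.1/16",
    "cni0": "10.244.0.1/24",
}

print(bridge_for_ip("10.244.0.15", bridges))
print(bridge_for_ip("192.168.5.5", bridges))
```

A result of None, or a bridge other than the one your pod network is supposed to use, points at the mismatch described in the steps above.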

Install kubernetes on Centos/RHEL 7

Kubernetes is a cluster and orchestration engine for docker containers. In other words, Kubernetes is open source software used to orchestrate and manage docker containers in a cluster environment. Kubernetes is also known as k8s; it was developed by Google and donated to the “Cloud Native Computing Foundation”.

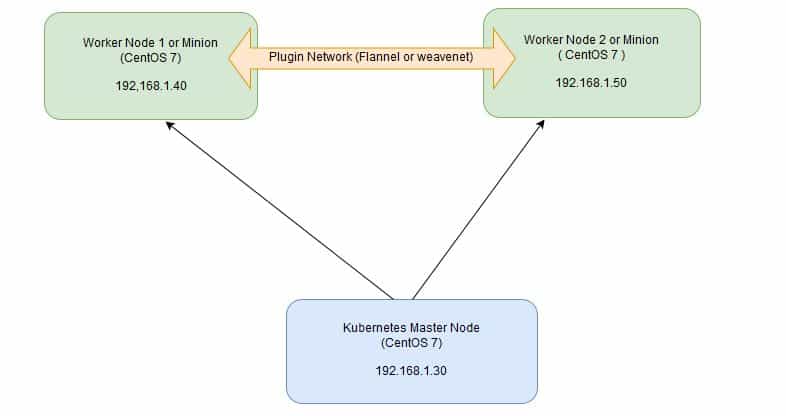

In a Kubernetes setup we have one master node and multiple worker nodes. A cluster node is also known as a worker node or minion. From the master node we manage the cluster and its nodes using the ‘kubeadm‘ and ‘kubectl‘ commands.

Kubernetes can be installed and deployed using following methods:

- Minikube ( It is a single node kubernetes cluster)

- Kops ( Multi node kubernetes setup into AWS )

- Kubeadm ( Multi Node Cluster in our own premises)

In this article we will install Kubernetes 1.7 on CentOS 7 / RHEL 7 with the kubeadm utility. My setup uses three CentOS 7 servers with minimal installation. One server will act as the master node and the other two servers will be minion or worker nodes.

On the Master Node following components will be installed

- API Server – It provides the kubernetes API using JSON / YAML over HTTP; the states of API objects are stored in etcd

- Scheduler – It is a program on master node which performs the scheduling tasks like launching containers in worker nodes based on resource availability

- Controller Manager – Main Job of Controller manager is to monitor replication controllers and create pods to maintain desired state.

- etcd – It is a Key value pair data base. It stores configuration data of cluster and cluster state.

- Kubectl utility – It is a command line utility which connects to API Server on port 6443. It is used by administrators to create pods, services etc.

On Worker Nodes following components will be installed

- Kubelet – It is an agent which runs on every worker node, it connects to docker and takes care of creating, starting, deleting containers.

- Kube-Proxy – It routes the traffic to appropriate containers based on ip address and port number of the incoming request. In other words we can say it is used for port translation.

- Pod – A pod can be defined as a group of one or more containers that are deployed together on a single worker node or docker host.

Installations Steps of Kubernetes 1.7 on CentOS 7 / RHEL 7

Perform the following steps on Master Node

Step 1: Disable SELinux & setup firewall rules

Login to your kubernetes master node and set the hostname and disable selinux using following commands

~]# hostnamectl set-hostname 'k8s-master'

~]# exec bash

~]# setenforce 0

~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux

Set the following firewall rules.

[root@k8s-master ~]# firewall-cmd --permanent --add-port=6443/tcp

[root@k8s-master ~]# firewall-cmd --permanent --add-port=2379-2380/tcp

[root@k8s-master ~]# firewall-cmd --permanent --add-port=10250/tcp

[root@k8s-master ~]# firewall-cmd --permanent --add-port=10251/tcp

[root@k8s-master ~]# firewall-cmd --permanent --add-port=10252/tcp

[root@k8s-master ~]# firewall-cmd --permanent --add-port=10255/tcp

[root@k8s-master ~]# firewall-cmd --reload

[root@k8s-master ~]# echo '1' > /proc/sys/net/bridge/bridge-nf-call-iptables

Note: In case you don’t have your own dns server then update /etc/hosts file on master and worker nodes

192.168.1.30 k8s-master

192.168.1.40 worker-node1

192.168.1.50 worker-node2

Step 2: Configure Kubernetes Repository

Kubernetes packages are not available in the default CentOS 7 & RHEL 7 repositories. Use the command below to configure the package repository.

[root@k8s-master ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg
        https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF

Step 3: Install Kubeadm and Docker

Once the package repositories are configured, run the command below to install the kubeadm and docker packages.

[root@k8s-master ~]# yum install kubeadm docker -y

Start and enable the kubelet and docker services

[root@k8s-master ~]# systemctl restart docker && systemctl enable docker

[root@k8s-master ~]# systemctl restart kubelet && systemctl enable kubelet

Step 4: Initialize Kubernetes Master with ‘kubeadm init’

Run the command below to initialize and set up the kubernetes master.

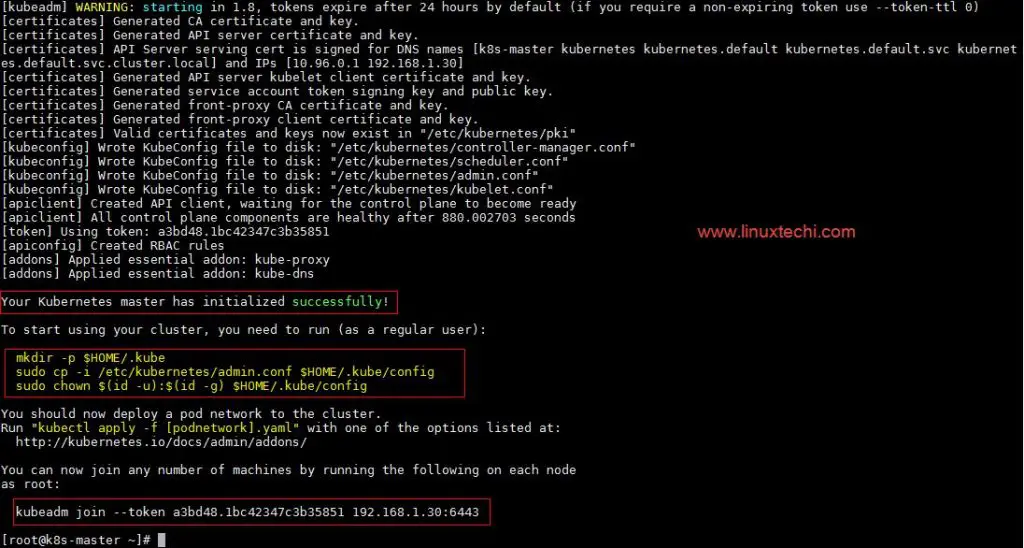

[root@k8s-master ~]# kubeadm init

Output of above command would be something like below

As we can see in the output, the kubernetes master has been initialized successfully. Execute the commands below to use the cluster as the root user.

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# chown $(id -u):$(id -g) $HOME/.kube/config

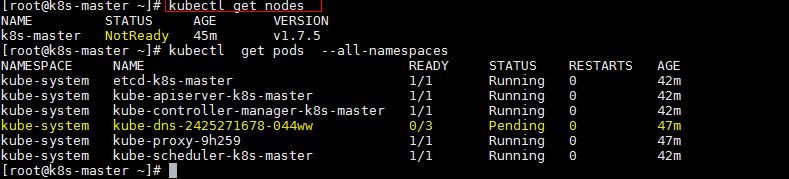

Step 5: Deploy pod network to the cluster

Run the commands below to get the status of the cluster and pods.

To make the cluster status Ready and the kube-dns status Running, deploy the pod network so that containers on different hosts can communicate with each other. The pod network is the overlay network between the worker nodes.

Run the command below to deploy the network.

[root@k8s-master ~]# export kubever=$(kubectl version | base64 | tr -d '\n')

[root@k8s-master ~]# kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$kubever"

serviceaccount "weave-net" created

clusterrole "weave-net" created

clusterrolebinding "weave-net" created

daemonset "weave-net" created
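The `base64` and `tr` stage in that pipeline exists because `base64` may wrap long output across several lines, and a newline inside a URL query parameter would break the request. Here is a small sketch of just that stage, using made-up sample text instead of real `kubectl version` output; the helper name `encode_for_url` is hypothetical.

```shell
# Hypothetical helper: base64-encode stdin and strip the newlines that
# base64 inserts when it wraps long output, so the result fits in a URL.
encode_for_url() {
    base64 | tr -d '\n'
}

# Stand-in for real `kubectl version` output (illustrative text only).
sample_version='Client Version: v1.7.5 (illustrative sample, padded so the encoded form is long enough to wrap)
Server Version: v1.7.5'

kubever=$(printf '%s\n' "$sample_version" | encode_for_url)
printf 'https://cloud.weave.works/k8s/net?k8s-version=%s\n' "$kubever"
```

Note that the pattern passed to `tr -d` must be `'\n'` with the backslash; a bare `'n'` would delete the letter n from the encoded text instead of the line breaks.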

Now run the following commands to verify the status

[root@k8s-master ~]# kubectl get nodes

NAME         STATUS    AGE       VERSION

k8s-master   Ready     1h        v1.7.5

[root@k8s-master ~]# kubectl get pods --all-namespaces

NAMESPACE     NAME                                 READY     STATUS    RESTARTS   AGE

kube-system   etcd-k8s-master                      1/1       Running   0          57m

kube-system   kube-apiserver-k8s-master            1/1       Running   0          57m

kube-system   kube-controller-manager-k8s-master   1/1       Running   0          57m

kube-system   kube-dns-2425271678-044ww            3/3       Running   0          1h

kube-system   kube-proxy-9h259                     1/1       Running   0          1h

kube-system   kube-scheduler-k8s-master            1/1       Running   0          57m

kube-system   weave-net-hdjzd                      2/2       Running   0          7m

Now let’s add worker nodes to the Kubernetes master node.

Perform the following steps on each worker node

Step 1: Disable SELinux & configure firewall rules on both the nodes

Before disabling SELinux, set the hostnames of the two nodes to ‘worker-node1’ and ‘worker-node2’ respectively.

~]# setenforce 0

~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux

~]# firewall-cmd --permanent --add-port=10250/tcp

~]# firewall-cmd --permanent --add-port=10255/tcp

~]# firewall-cmd --permanent --add-port=30000-32767/tcp

~]# firewall-cmd --permanent --add-port=6783/tcp

~]# firewall-cmd --reload

~]# echo '1' > /proc/sys/net/bridge/bridge-nf-call-iptables

Step 2: Configure Kubernetes Repositories on both worker nodes

~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

Step 3: Install the kubeadm and docker packages on both nodes

[root@worker-node1 ~]# yum install kubeadm docker -y

[root@worker-node2 ~]# yum install kubeadm docker -y

Start and enable the docker service

[root@worker-node1 ~]# systemctl restart docker && systemctl enable docker

[root@worker-node2 ~]# systemctl restart docker && systemctl enable docker

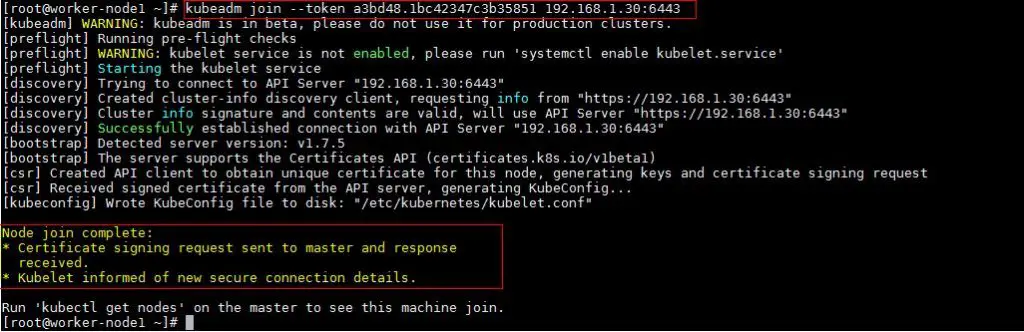

Step 4: Now Join worker nodes to master node

To join worker nodes to the master node, a token is required. When the Kubernetes master is initialized, the output of kubeadm init includes the join command and the token. Copy that command and run it on both nodes.
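Because the token is copied by hand between machines, a quick shape check can catch a truncated paste before the join is attempted. The sketch below relies on the kubeadm bootstrap token format (six lowercase alphanumeric characters, a dot, then sixteen more); `is_valid_token` is a hypothetical helper, and it checks the shape only, not whether the master still accepts the token.

```shell
# Hypothetical helper: succeed if $1 has the shape of a kubeadm bootstrap
# token, i.e. [a-z0-9]{6}.[a-z0-9]{16}. It does not contact the master.
is_valid_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# The token used in this walkthrough.
token='a3bd48.1bc42347c3b35851'

if is_valid_token "$token"; then
    echo "token looks well-formed"
else
    echo "token is malformed; copy it again from the kubeadm init output"
fi
```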

[root@worker-node1 ~]# kubeadm join --token a3bd48.1bc42347c3b35851 192.168.1.30:6443

Output of above command would be something like below

[root@worker-node2 ~]# kubeadm join --token a3bd48.1bc42347c3b35851 192.168.1.30:6443

Output would be something like below

Now verify Nodes status from master node using kubectl command

[root@k8s-master ~]# kubectl get nodes

NAME           STATUS    AGE       VERSION

k8s-master     Ready     2h        v1.7.5

worker-node1   Ready     20m       v1.7.5

worker-node2   Ready     18m       v1.7.5

As we can see, the master and worker nodes are in Ready status. This confirms that Kubernetes 1.7 has been installed successfully and that the two worker nodes have joined the cluster. Now we can create pods and services.
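Freshly joined nodes can take a little while to report Ready, so this check is a natural candidate for scripting. A minimal sketch, assuming STATUS is the second column of `kubectl get nodes` as in the listing above; `all_nodes_ready` is a hypothetical helper and the demo input is sample text.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and succeed
# only if at least one node is listed and every STATUS is exactly "Ready".
all_nodes_ready() {
    awk 'NR > 1 { total++; if ($2 != "Ready") bad++ }
         END { exit ((total > 0 && bad == 0) ? 0 : 1) }'
}

# On the master this could be used to wait for the cluster:
#   until kubectl get nodes | all_nodes_ready; do sleep 5; done

# Demo with sample text in which one worker has not come up yet.
if all_nodes_ready <<'EOF'
NAME           STATUS     AGE       VERSION
k8s-master     Ready      2h        v1.7.5
worker-node1   Ready      20m       v1.7.5
worker-node2   NotReady   18m       v1.7.5
EOF
then
    echo "all nodes ready"
else
    echo "some nodes are not ready yet"
fi
# prints: some nodes are not ready yet
```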

Installing Kubernetes on your Windows with Minikube

Personally, I think that if you are looking for a container management solution in today’s world, you have to invest your time in Kubernetes (k8s). There is no doubt about that, for multiple reasons. To the best of my understanding, these include:

- Kubernetes is Open Source

- Great momentum in terms of activities & contribution at its Open Source Project

- Decades of experience running its predecessor at Google

- Support of multiple OS and infrastructure software vendors

- Rate at which features are being released

- Production readiness (Damn it, Pokemon Go met its scale due to Kubernetes)

- Number of features available. Check out the list of features at the home page.

The general perception about a management solution like Kubernetes is that it requires quite a bit of setup before you can try it out locally. Beyond the setup time, this often means you only get access to it during a staging phase or something like that. Ideally you want a similar environment in development too, so that you are as close as possible to what it takes to run your application. The implication is that you want it running on your laptop/desktop, where you are likely to do your development.

This was the goal behind the minikube project, and the team has put in fantastic effort to help us set up and run Kubernetes on our development machines. This is as simple and portable as it can get. The tagline of the minikube project says it all: “Run Kubernetes locally”.

Side Note: The design of the minikube logo makes for interesting reading.

This post is going to take you through setting up Minikube on your Windows development machine and then taking it for a Hello World spin to see a local Kubernetes cluster in action. Along the way, I will highlight my environment and what I had to do to get the experimental build of minikube working on my Windows machine. Yes, it is experimental software, but it works!

If you are not on Windows, the instructions to set up minikube on either your Linux or Mac machine are also available here. Check them out. You can then safely skip over the setup and go to the section where we do a quick Hello World to test drive Kubernetes locally.

Keep in mind that Minikube gives you a single node cluster that is running in a VM on your development machine.

Of course, once you are done with what you see in this blog, I strongly recommend that you also look at Managed Container Orchestration solutions like Google Container Engine.

Let’s get started now with installation of minikube. But first, we must make sure that our development machine has some of the pre-requisites required to run it. Do not ignore that!

Using VirtualBox and not Hyper-V

VirtualBox and Hyper-V (which is available on Windows 10) do not make a happy pair, and you are bound to run into situations where the tools get confused. I preferred to use VirtualBox and avoid all the esoteric command-line switches that we would need to provide to enable creation of the underlying Docker hosts, etc.

To disable Hyper-V, go to Turn Windows features on or off and you will see a dialog with list of Windows features as shown below. Navigate to the Hyper-V section and disable it completely.

This requires a restart of the machine to take effect. On my machine, it even ended up doing a Windows Update and configuring it, and a good 10 minutes later it was back up.

Great! We have everything now to get going.

Development Machine Environment

I am assuming that you have a setup similar to this one. I believe you should be fine on Windows 7 too, which does not have the Hyper-V stuff discussed in this post.

- Windows 10 Laptop. VT-x/AMD-v virtualization must be enabled in BIOS.

- Docker Toolbox v1.12.0. The toolbox sets up VirtualBox and I have gone with that.

- kubectl command line utility. This is the CLI utility for the Kubernetes cluster; you need to install it and have it available in your PATH. To install the v1.8.1 release, open the following URL in your browser: http://storage.googleapis.com/kubernetes-release/release/v1.8.1/bin/windows/amd64/kubectl.exe. This will download the kubectl CLI executable. Make it available in the PATH environment variable.

Note: kubectl versions are available at a generic location as per the following format: https://storage.googleapis.com/kubernetes-release/release/${K8S_VERSION}/bin/${GOOS}/${GOARCH}/${K8S_BINARY}

To find the latest kubectl version, go to this link: https://storage.googleapis.com/kubernetes-release/release/stable.txt
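The URL pattern in the note can be filled in mechanically. The following is a rough sketch for a Unix-style shell (for example Git Bash on Windows); `kubectl_url` is a hypothetical helper that only builds the URL string from the four placeholders and does not download anything.

```shell
# Hypothetical helper: build a kubectl download URL from the pattern
#   .../release/${K8S_VERSION}/bin/${GOOS}/${GOARCH}/${K8S_BINARY}
kubectl_url() {
    version=$1   # e.g. v1.8.1
    goos=$2      # e.g. windows, linux, darwin
    goarch=$3    # e.g. amd64
    binary=$4    # e.g. kubectl.exe on Windows, kubectl elsewhere
    printf 'https://storage.googleapis.com/kubernetes-release/release/%s/bin/%s/%s/%s\n' \
        "$version" "$goos" "$goarch" "$binary"
}

# The Windows build referenced in this post.
kubectl_url v1.8.1 windows amd64 kubectl.exe
```

The version argument could instead be taken from the contents of stable.txt, so that the helper always points at the current stable release.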

Minikube installation

The first step is to take the kubectl.exe file that you downloaded in the previous step and place it in the C:\ folder.

The next step is to download the minikube binary from the following location: https://github.com/kubernetes/minikube/releases

Go to the Windows download link as shown below:

This will start downloading the v0.22.3 release of the executable. The file name is minikube-windows-amd64.exe. Just rename this to minikube.exe and place it in the C:\ drive, alongside the kubectl.exe file from the previous section.

You are all set now to launch a local Kubernetes one-node cluster!

All the steps moving forward are done in PowerShell. Launch PowerShell in Administrative mode (Ctrl-Shift-Enter) and navigate to the C:\ drive, where the kubectl.exe and minikube.exe files are present.

A few things to note

Let’s do our standard testing to validate our utilities.

If you go to your %HOMEPATH%\.minikube folder now, you will notice that several folders got created. Take a look!

There are multiple commands that Minikube supports. You can use the standard `--help` option to see the list of commands that it has:

PS C:\> .\minikube --help

Minikube is a CLI tool that provisions and manages single-node Kubernetes clusters optimized for development workflows

Usage: minikube [command]

Available Commands:

dashboard          Opens/displays the kubernetes dashboard URL for your local cluster

delete             Deletes a local kubernetes cluster.

docker-env         sets up docker env variables; similar to '$(docker-machine env)'

get-k8s-versions   Gets the list of available kubernetes versions available for minikube.

ip                 Retrieve the IP address of the running cluster.

logs               Gets the logs of the running localkube instance, used for debugging minikube, not user code.

config             Modify minikube config

service            Gets the kubernetes URL for the specified service in your local cluster

ssh                Log into or run a command on a machine with SSH; similar to 'docker-machine ssh'

start              Starts a local kubernetes cluster.

status             Gets the status of a local kubernetes cluster.

stop               Stops a running local kubernetes cluster.

version            Print the version of minikube.

Flags:

--alsologtostderr[=false]: log to standard error as well as files

--log-flush-frequency=5s: Maximum number of seconds between log flushes

--log_backtrace_at=:0: when logging hits line file:N, emit a stack trace

--log_dir="": If non-empty, write log files in this directory

--logtostderr[=false]: log to standard error instead of files

--show-libmachine-logs[=false]: Whether or not to show logs from libmachine.

--stderrthreshold=2: logs at or above this threshold go to stderr

--v=0: log level for V logs

--vmodule=: comma-separated list of pattern=N settings for file-filtered logging

Use "minikube [command] --help" for more information about a command.

I have highlighted a couple of global flags that you can use with all minikube commands. These flags are useful at times to see what is going on under the hood and to get the output on standard output (console/command).

Minikube supports multiple versions of Kubernetes and the latest version is v1.7.5. To check out the different versions supported try out the following command: